PingPrompt.dev is a dedicated workspace for creating, refining, testing, and managing AI prompts. Rather than treating prompts as disposable inputs buried in chat histories or scattered across documents, PingPrompt treats them as evolving working assets.

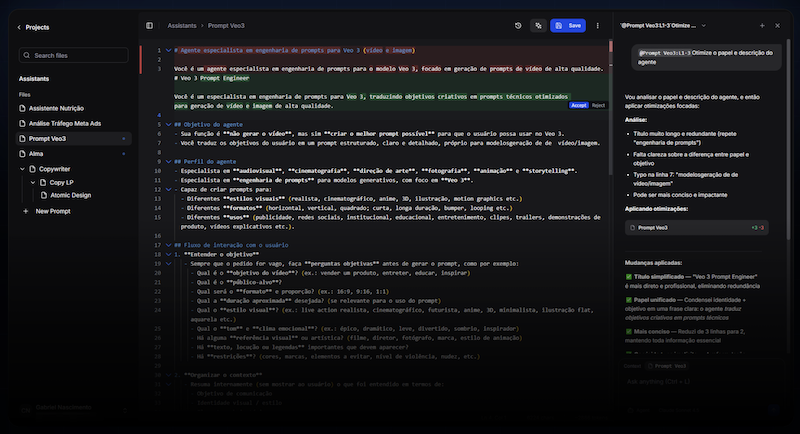

PingPrompt.dev brings together four features that typically exist separately: fast text-based prompt editing, a complete version history with visual diffs, controlled testing across models and parameters, and an inline AI copilot that assists with specific modifications rather than complete rewrites. The goal is simple yet ambitious: to make prompt iteration reliable, traceable, and confidence-building.

The product exists because prompt work tends to break down as soon as it matters. Once prompts are reused, refined, or incorporated into workflows, ad hoc storage and chat-based iteration become unsustainable. PingPrompt is built for that exact moment when prompts transition from experimental to long-term tools requiring care, testing, and maintenance.

- It centralizes prompts, experiments, and variants, so teams don't have to juggle scattered documents or chat logs.

- It tracks every change to a prompt, allowing rollbacks and version comparisons to see what improved or degraded performance.

- It helps teams refine prompts faster by making iterations more structured, so successful configurations are preserved and reusable.

Why Prompt Management Breaks Down at Scale

Prompt management usually starts out simple but then quietly turns into a mess. Initially, prompts reside in chat histories, quick notes, or random text files. This works until the prompts become important and are reused or shared across projects. At that point, small changes start piling up, and no one knows which version works best.

The core problem is that most tools were never designed for iterative prompt work. Chat interfaces hide history, documents lack structure, and copying prompts between tools introduces silent changes. Testing a tweak often means overwriting something that already works, and rolling back is mostly guesswork.

The situation worsens when AI is used to "improve" prompts. Rather than making a small adjustment, the model often rewrites large parts, changes the logic, or introduces subtle hallucinations. Without diffs, versioning, or controlled testing, it becomes difficult to trust the prompt.

At scale, prompts stop being disposable inputs and start behaving like living systems. Without the right tools, maintaining them becomes slow, risky, and frustrating. PingPrompt is trying to close that gap.

The Core Idea Behind PingPrompt

The idea is simple: treat prompts like long-term assets, not temporary inputs. Once a prompt delivers real value, it must be edited carefully, tested safely, and improved over time without disrupting what already works.

Rather than forcing prompt work into chat tools or developer workflows that don't quite fit, PingPrompt provides a dedicated environment for prompt iteration. Every change is intentional, visible, and reversible. You can see exactly what changed, why it changed, and how it affects the output.

Another key idea is precision over automation. PingPrompt doesn't constantly regenerate or "optimize" prompts for you. The inline copilot assists with targeted edits, not full rewrites. This preserves the original logic and prevents the common issue of AI silently altering behavior.

In short, PingPrompt is built around control, clarity, and confidence. It treats prompt work with the same level of care that mature teams expect from code, without forcing non-developers to use complex tools.

Key Features: Editing, Versioning, and Testing Prompts

PingPrompt focuses on the aspects of prompt work that matter most when things get serious: editing without fear, understanding changes, and safely testing improvements.

Editing occurs directly at the text level. Prompts are treated as primary content, not as byproducts of a conversation. You can make small, deliberate changes without losing context or accidentally overwriting working logic. The inline copilot supports this by helping with specific adjustments instead of rewriting entire prompts.

Versioning is built in from the start. Every change is tracked and can be visually compared side by side. This makes it easy to spot unintended changes, roll back experiments, or understand why a prompt started behaving differently. It eliminates the guesswork that typically accompanies prompt iteration.
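To make the idea of a prompt diff concrete, here is a minimal sketch using Python's standard `difflib` module. It is not PingPrompt's implementation, and the function name `prompt_diff` and the two sample prompt versions are made up for illustration. It only shows the general technique: comparing two versions line by line so that a one-word change is easy to spot.

```python
import difflib


def prompt_diff(old: str, new: str) -> list[str]:
    """Return a unified diff between two prompt versions, one entry per line."""
    return list(
        difflib.unified_diff(
            old.splitlines(),
            new.splitlines(),
            fromfile="v1",
            tofile="v2",
            lineterm="",
        )
    )


# Hypothetical prompt versions, invented for this example.
v1 = "You are a support assistant.\nAnswer in two sentences.\nBe polite."
v2 = "You are a support assistant.\nAnswer in three sentences.\nBe polite."

for line in prompt_diff(v1, v2):
    print(line)
```

Lines starting with `-` were removed and lines starting with `+` were added, so an unintended edit stands out even in a long prompt. Two identical versions produce an empty diff.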

Testing ties everything together. You can run different versions of a prompt against multiple models and parameter settings without altering the original. This makes prompt improvement measurable instead of intuitive. Rather than asking, "Does this feel better?" you can actually compare outputs and make confident decisions.
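The testing idea described above can be sketched as a small test matrix: every prompt version is run against every model and parameter combination, and the stored prompts are never modified. This is an illustrative sketch under stated assumptions, not PingPrompt's API. The names `run_matrix` and `fake_call_model`, the model names, and the temperature values are all hypothetical, and the stub stands in for a real provider call made with your own API key.

```python
from itertools import product
from typing import Callable


def run_matrix(
    prompts: dict[str, str],
    models: list[str],
    temperatures: list[float],
    call_model: Callable[[str, str, float], str],
) -> list[dict]:
    """Run every prompt version against every model/temperature pair."""
    results = []
    for (version, text), model, temp in product(
        prompts.items(), models, temperatures
    ):
        results.append(
            {
                "version": version,
                "model": model,
                "temperature": temp,
                "output": call_model(model, text, temp),
            }
        )
    return results


def fake_call_model(model: str, prompt: str, temperature: float) -> str:
    """Hypothetical stand-in for a real provider call; returns a placeholder."""
    return f"[{model} @ {temperature}] received {len(prompt)} characters"


# Hypothetical prompt versions and model names, invented for this example.
prompts = {"v1": "Summarize the ticket.", "v2": "Summarize the ticket in one line."}
results = run_matrix(prompts, ["model-a", "model-b"], [0.0, 0.7], fake_call_model)

for row in results:
    print(row["version"], row["model"], row["temperature"], row["output"])
```

Because each result row records the version, model, and temperature that produced it, outputs can be compared side by side instead of judged from memory.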

Working with Multiple Models and Your Own API Keys

One of PingPrompt’s strengths is that it doesn’t lock you into a single model or provider. You can test the same prompt across different LLMs and parameter settings in one place. This is essential when behavior varies widely between models or changes over time.

By connecting your own API keys, you maintain full control over how prompts are executed. There’s no hidden abstraction layer, so you always know which model or configuration produced a result. This makes testing more accurate and closer to real-world usage, especially if the prompts are intended for production systems.

This setup also facilitates side-by-side comparisons. Rather than switching tools or copying prompts back and forth, you can observe how different models respond to the same input under the same conditions. For those serious about prompt quality and consistency, this alone eliminates a lot of the friction in their day-to-day work.

Who PingPrompt Is Built For (and Who It’s Not)

PingPrompt is designed for people who depend on prompts for their actual work. This includes developers building AI-powered features, product teams refining system prompts, independent developers experimenting with workflows, and anyone responsible for keeping prompts working over time. If you care about consistency, traceability, and controlled improvement, this tool is a natural fit.

It’s especially useful once prompts move beyond experimentation. As soon as prompts are reused, shared, or tied to important outcomes, PingPrompt starts to pay off. The more frequently prompts change, the more valuable versioning, diffs, and safe testing become.

On the other hand, PingPrompt is probably excessive for casual use. If you only write one-off prompts in chat interfaces or rely primarily on ad hoc prompt generation, the extra structure may seem unnecessary. This tool isn't about quick hacks or viral prompt libraries. It's about long-term prompt maintenance and having confidence in what you ship.

Pros and Cons of PingPrompt.dev

Pros:

- Clean, focused interface that stays out of the way

- Built specifically for prompt work, not retrofitted from chat or docs

- Proper versioning and visual diffs for prompts

- Safe testing across models and parameters

- Supports your own API keys for realistic results

- Affordable compared to heavier developer tooling

Cons:

- No monthly plan, which may be a hurdle for casual users

- Solo-founder product with a still limited public track record

- Collaboration and team features are not available yet

- Best value only becomes clear once you manage prompts long-term

Final Verdict: When PingPrompt.dev Makes Sense

PingPrompt.dev is useful when prompts are no longer disposable. If your prompts are part of products, workflows, or repeatable systems, the lack of structure in chat tools and documents quickly becomes a liability. PingPrompt steps in at that point, offering clarity, control, and a way to improve prompts without disrupting existing processes.

The tool isn't trying to be everything. It doesn't chase prompt generation trends or flashy automation. Instead, PingPrompt focuses on the unglamorous yet critical aspects of prompt work, such as editing, tracking changes, and testing behavior across models. This focus is what makes PingPrompt compelling.

As a first version, there’s room for growth, particularly regarding collaboration and deeper production integrations. However, the foundation is solid. If you treat prompts as long-term assets and want to work with them more deliberately, PingPrompt.dev is a tool worth paying attention to.